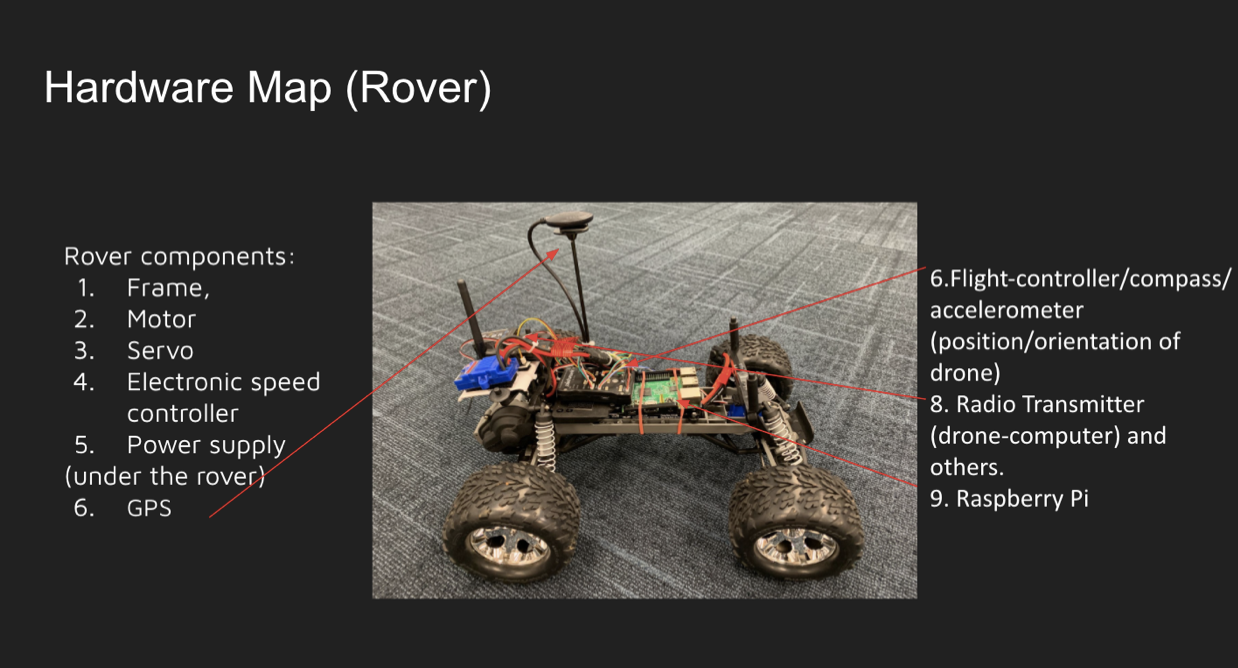

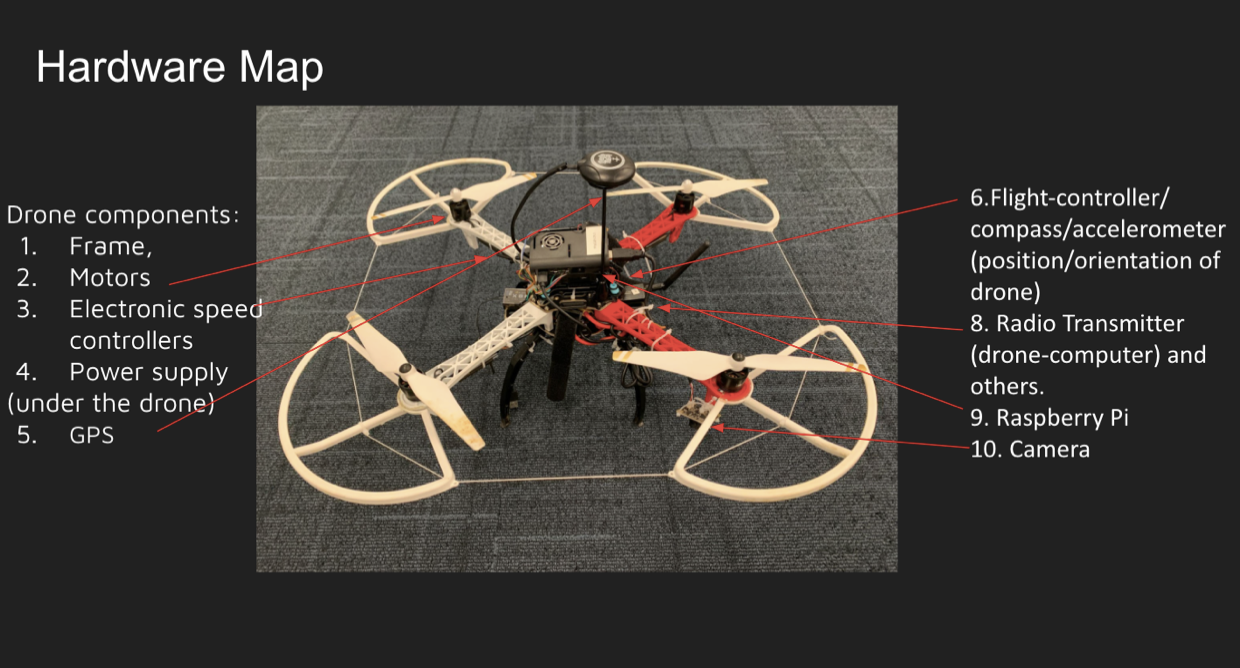

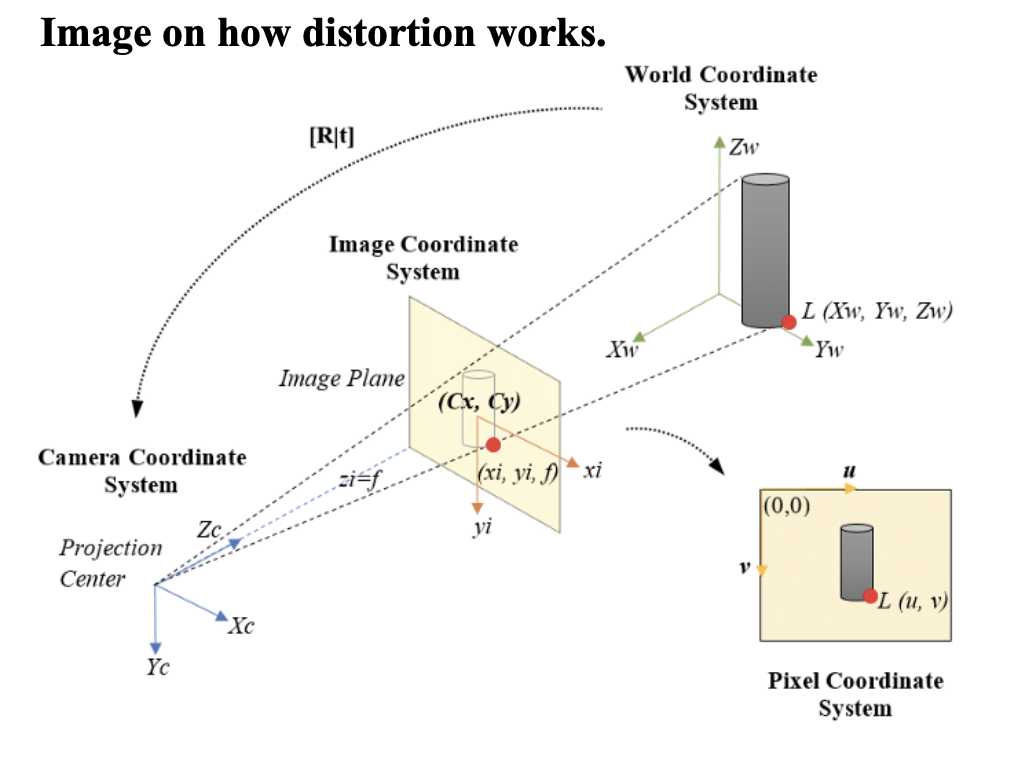

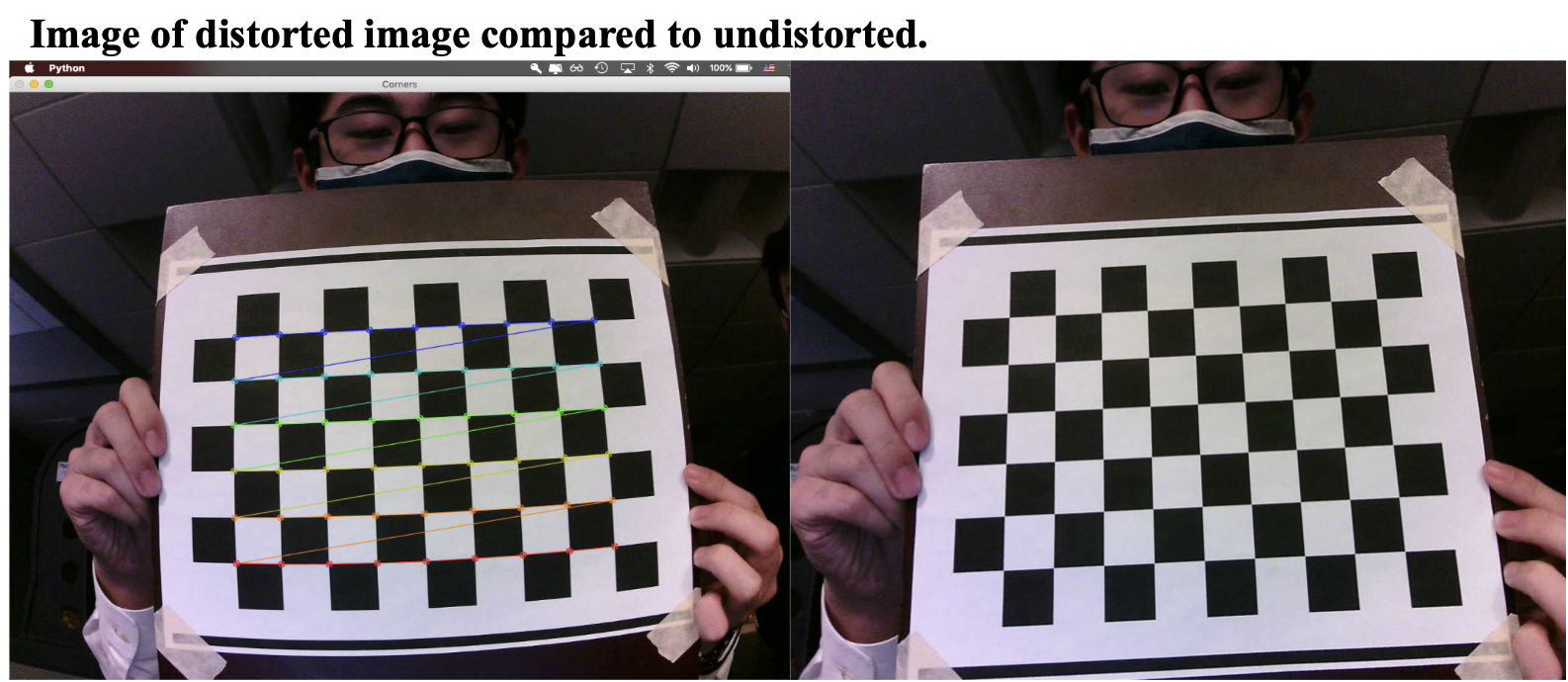

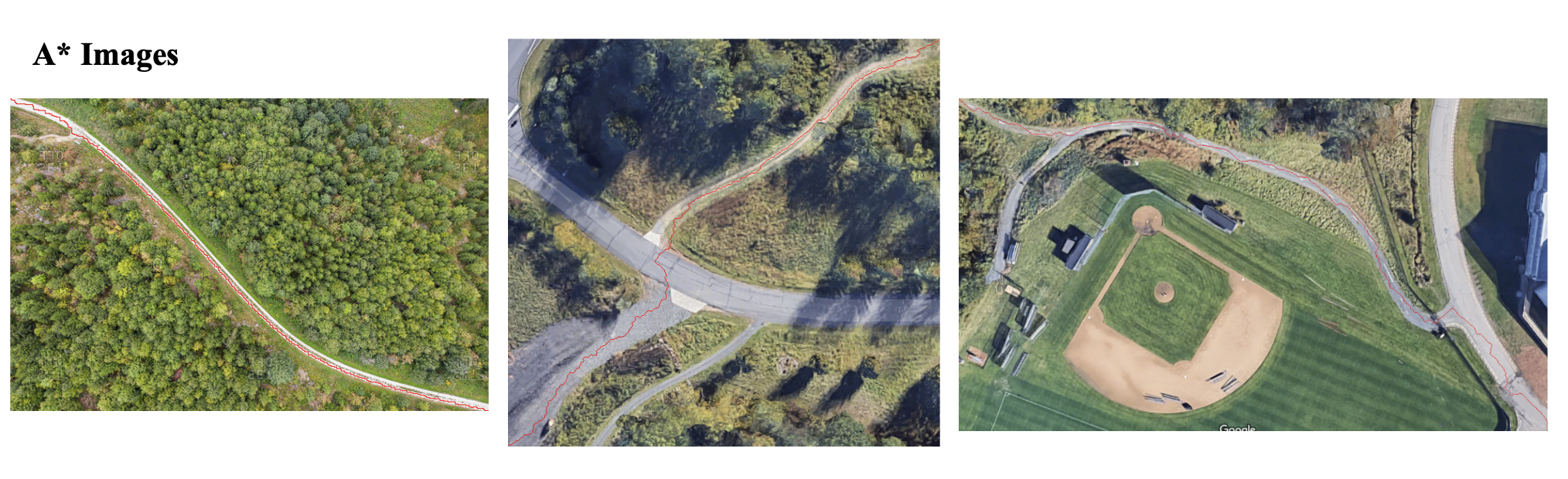

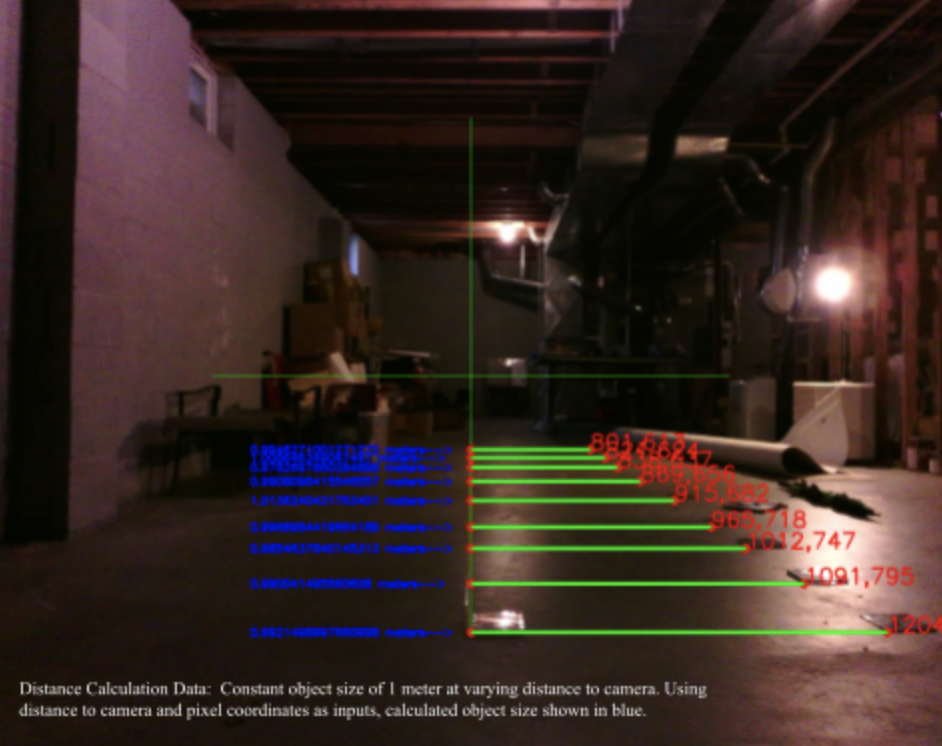

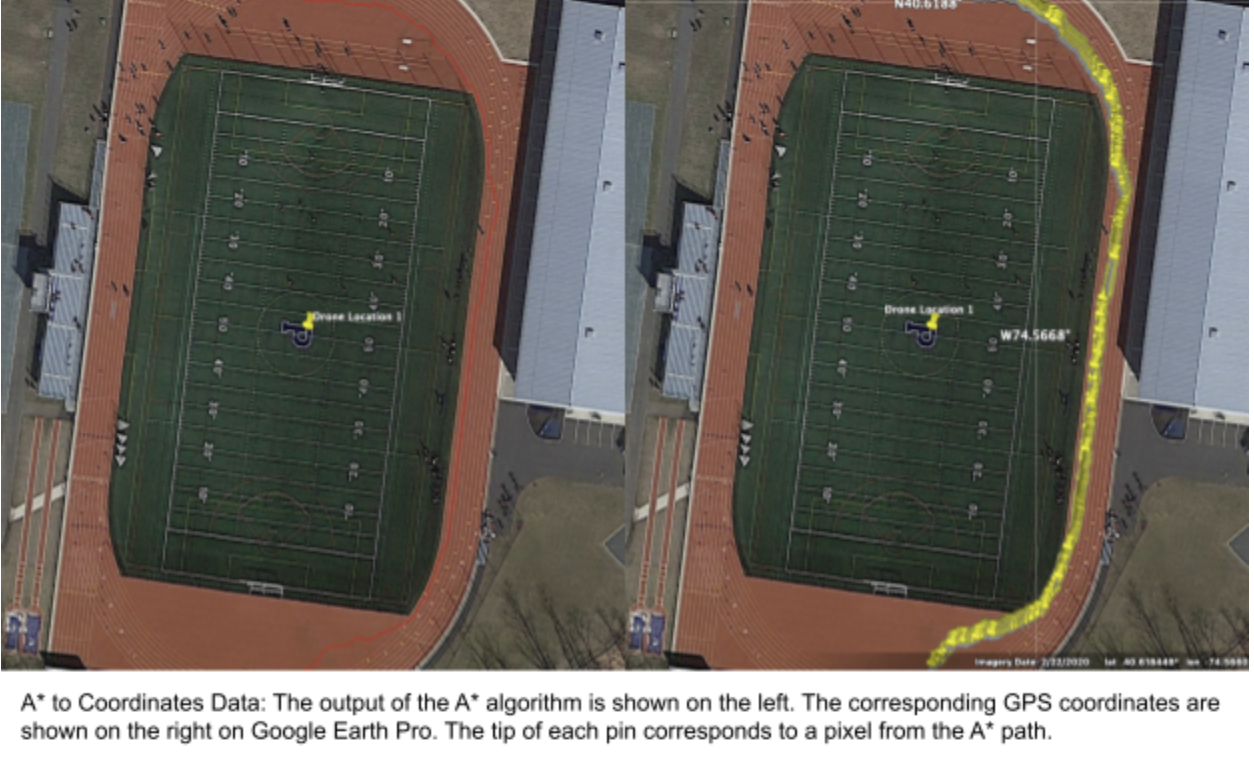

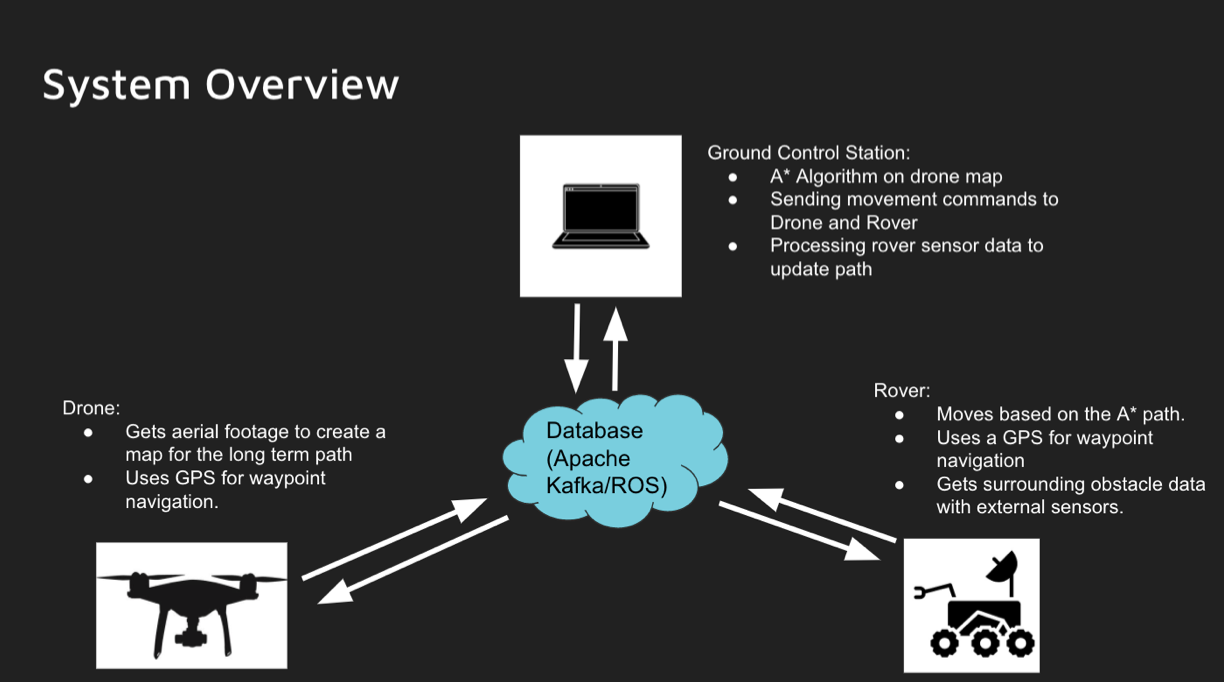

For the physical aspect of the project, we have a functional drone and rover. Our drone can fly fully autonomously to a predetermined location (see drone video). We use a Raspberry Pi as the onboard computer, which lets us take images and control the drone from the ground. We are currently building a gimbal that will keep the camera parallel to the surface of the Earth. Our rover can currently be driven by remote control (see rover video), and its Raspberry Pi and autonomous-driving setup are in place. Autonomous driving succeeds in simulation, but in the real world the rover appears to drive to random locations rather than to the assigned GPS coordinates. We believe the problem is related to GPS calibration and a flimsy GPS mount, which causes the GPS module to constantly wobble and fall over.

(drone hardware map picture) (rover hardware map picture) (drone autonomous flying video) (rover autonomous driving video)

To correct camera distortion in the images taken by the drone, we first estimated the camera's characteristics through camera calibration. With the camera stationary, we moved a checkerboard with known square sizes around the camera's field of view and captured images at different positions. Our calibration algorithm then locates each corner of the checkerboard in every image. By mapping each detected corner to its known real-world point, we can estimate the camera's distortion. After repeated testing, we currently achieve a reprojection error of 0.1. Reprojection error measures how far the known checkerboard corners, projected through the estimated camera model, land from where they were actually detected, so a value this low indicates a very close fit.

(image of how projection works) (images of distorted image compared to undistorted)

A* is the pathfinding algorithm we use for long-range path planning on the drone images. We use Python's OpenCV image processing library to load and process each image, which we then treat as a graph of nodes: each pixel of the image is a node with coordinate and color values. The A* algorithm finds the shortest path between two specified pixels.
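
The pixel-graph idea above can be sketched as follows. This is a minimal, hypothetical sketch, not our actual program: it treats the image as an 8-connected grid of color pixels, uses a step cost equal to the geometric step length plus `color_weight` times the color difference between neighboring pixels, and uses straight-line distance to the end pixel as the heuristic. The names `astar`, `color_distance`, and `color_weight` are illustrative assumptions. An image loaded with OpenCV (`cv2.imread`) could be passed in via its `.tolist()` form; OpenCV's BGR channel order does not affect a color-difference term. Pure Python like this is slow on full-resolution images, so it only illustrates the search logic.

```python
import heapq
import math
from typing import Dict, List, Optional, Sequence, Set, Tuple

Pixel = Tuple[int, int]  # (row, col)


def color_distance(a: Sequence[int], b: Sequence[int]) -> float:
    """Euclidean distance between two color triples (RGB or BGR)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def astar(image: List[List[Sequence[int]]], start: Pixel, goal: Pixel,
          color_weight: float = 0.05) -> Optional[List[Pixel]]:
    """A* over an 8-connected pixel grid (hypothetical sketch).

    Step cost = geometric step length + color_weight * color change.
    Heuristic = straight-line distance to the goal, which never overestimates
    because every step costs at least its geometric length.
    """
    rows, cols = len(image), len(image[0])

    def h(p: Pixel) -> float:
        return math.hypot(p[0] - goal[0], p[1] - goal[1])

    open_heap: List[Tuple[float, float, Pixel]] = [(h(start), 0.0, start)]
    best_g: Dict[Pixel, float] = {start: 0.0}
    came_from: Dict[Pixel, Pixel] = {}
    closed: Set[Pixel] = set()

    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur in closed:
            continue  # stale heap entry for an already-expanded pixel
        if cur == goal:
            path = [cur]
            while cur in came_from:  # walk back to the start pixel
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        closed.add(cur)
        r, c = cur
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr, dc) == (0, 0) or not (0 <= nr < rows and 0 <= nc < cols):
                    continue
                step = math.hypot(dr, dc) + color_weight * color_distance(
                    image[r][c], image[nr][nc])
                new_g = g + step
                if new_g < best_g.get((nr, nc), math.inf):
                    best_g[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cur
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None  # goal unreachable


# Toy 5x5 "image": green ground with a gray column (rows 0-3) and a gap at row 4.
GREEN, GRAY = (0, 128, 0), (100, 100, 100)
grid = [[GREEN] * 5 for _ in range(5)]
for row in range(4):
    grid[row][2] = GRAY

print(astar(grid, (0, 0), (0, 4), color_weight=0.0))  # straight across row 0
print(astar(grid, (0, 0), (0, 4), color_weight=1.0))  # detours through (4, 2)
```

Raising `color_weight` makes the search detour around color changes more aggressively, and lowering it lets the path cut through them; this is the color-versus-distance trade-off that still needs tuning.
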
A* prioritizes pixels that are closest to the end pixel in straight-line distance, as well as pixels whose color is similar to the current pixel, since a dramatic color change likely indicates a dramatic terrain change. This lets the algorithm avoid obstacles while keeping the path to the end pixel as short as possible. Our Python A* program has been tested on satellite images and navigates around obstacles and stays on paths effectively. However, the algorithm may weight pixel color difference too heavily (detouring around small obstacles) or too lightly (cutting through difficult obstacles to shorten the path). The balance between color difference and distance can be fine-tuned once we test A* with the rover.

(A* images)

The rover drives autonomously using GPS coordinates as waypoints. Since A* outputs a sequence of pixel coordinates, we need to map image pixels to real-world GPS coordinates. As described in the abstract, we designed an algorithm that maps each pixel coordinate to a real-world GPS coordinate by first calculating the real-world distance and then converting it to GPS coordinates. So far, our pixel-to-real-world-distance algorithm has achieved ±2 cm accuracy (see pixel to distance image). Part of this error may be human error, since the pixels marking the beginning and end of the object in the image were selected by hand. The measurement can be fine-tuned further once it is run with A*. The final conversion to GPS coordinates has so far been tested on satellite imagery and checked against accurate online distance calculators, agreeing to within millimeters.

(distance calculation data) (A* to coordinates data)

With the most basic components of the project nearly complete, we have created a pipeline that connects them, so we can begin testing DROVER as a complete system while we research direct networking and communication between the drone, rover, and ground control station.

(system overview image)
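
The pixel-to-GPS stage of this pipeline can be sketched as below. This does not reproduce the algorithm from the abstract; it is a minimal sketch under stated assumptions: a pinhole camera pointing straight down (the orientation the gimbal is meant to provide), flat ground, lens distortion already removed using the calibration results, the drone's GPS fix lying directly below the camera's principal point, and the top of the image pointing north. It uses a spherical-Earth (equirectangular) approximation for the meters-to-degrees step, which is reasonable over the short distances a single image covers, plus a haversine distance to cross-check converted points, similar in spirit to comparing against an online distance calculator. All function names and sample numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical-Earth approximation)


def pixel_to_offset_m(px: Tuple[int, int], altitude_m: float,
                      fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Ground offset (north_m, east_m) of a pixel from the point below the camera.

    Assumes a pinhole camera pointing straight down over flat ground, image top = north.
    fx, fy, cx, cy are focal lengths and principal point (pixels) from calibration.
    """
    row, col = px
    east = (col - cx) * altitude_m / fx
    north = -(row - cy) * altitude_m / fy  # image rows increase toward the south
    return north, east


def offset_to_gps(lat_deg: float, lon_deg: float,
                  north_m: float, east_m: float) -> Tuple[float, float]:
    """Shift a GPS point by a small north/east offset (equirectangular approximation)."""
    dlat = north_m / EARTH_RADIUS_M
    dlon = east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg)))
    return lat_deg + math.degrees(dlat), lon_deg + math.degrees(dlon)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters, used here to cross-check converted points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def path_to_waypoints(path: Sequence[Tuple[int, int]], drone_lat: float, drone_lon: float,
                      altitude_m: float, fx: float, fy: float,
                      cx: float, cy: float) -> List[Tuple[float, float]]:
    """Convert an A* pixel path (row, col) into (lat, lon) waypoints for the rover."""
    waypoints = []
    for px in path:
        north, east = pixel_to_offset_m(px, altitude_m, fx, fy, cx, cy)
        waypoints.append(offset_to_gps(drone_lat, drone_lon, north, east))
    return waypoints


# Hypothetical numbers: drone 30 m up, fx = fy = 1000 px, principal point (320, 240).
wps = path_to_waypoints([(240, 320), (140, 320), (140, 420)],
                        40.0, -75.0, 30.0, 1000.0, 1000.0, 320.0, 240.0)
print(wps)
# Pixel (140, 320) is 100 px above the principal point, i.e. about 3 m north.
print(haversine_m(*wps[0], *wps[1]))
```

In a real deployment the north/east offsets would also need to be rotated by the drone's heading before conversion; that step is omitted here.
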